Proxies and their role in the SEO world seem to confuse quite a few people, which makes finding the best place to buy private proxies without getting ripped off quite difficult. Since most proxy providers come off a bit shady regardless of their legitimacy, it is hard to find the best solution out there. Because let’s face it, most activities that go along with proxies aren’t very legitimate.


I have personally used almost every proxy provider in the game but still wanted to run a comparison test and put any confusion to rest. For the test I purchased brand new sets of 10 private proxies from the top 4 providers out there. Using ScrapeBox, I ran the exact same set of queries with the same settings and thread count to determine which proxies were the fastest.

Then I turned up the heat (thread count) to see which would get banned the quickest. Before diving into the actual experiment and resulting data, let’s quickly go over exactly what private proxies are and what they are used for, for the noobs out there.

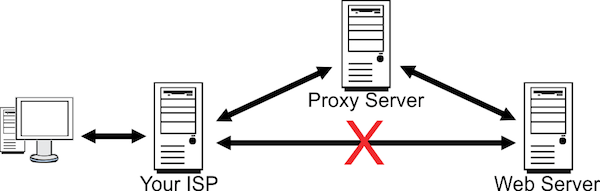

What Private Proxies are and why we need them for our SEO Shenanigans:

The world of proxies has always been a bit of a grey area, and most providers tend to look the other way at whatever shenanigans go on with their proxies.

That is the beauty of proxies: you can do things you would otherwise not be able to accomplish from one IP. Proxies let an SEO make requests, such as running searches or registering multiple accounts, appear to come from different locations, making them seem unique.


In reality though, it’s your neighborhood SEO scraping big data along with all kinds of other weird shit.

The applications for proxies are quite extensive, but these are the main purposes:

- Harvesting data from Google

- Registering multiple accounts on the same site

- Running Scrapebox

- Running GSA Search Engine Ranker

- Checking search engine rankings

- Various types of spamming in online communities

- Malicious activities using proxies for anonymity

Ok now that we’ve got that out of the way, let’s move onto my analysis.

Here are the top providers I compared, purchasing a set of 10 private proxies from each, in ascending order of price:

SquidProxies.com – $20

PowerupHosting.com – $20

BuyProxies.org – $20

MyPrivateProxy.net – $21.33

After signing up, all of the proxies were live and active within about 30 minutes.

My first area of testing was to run the same set of queries with each proxy set and time it.

I set it to retrieve 100 results per query with max connections at 1, considering the small set of 10 being used (typically I scrape with 100 proxies).

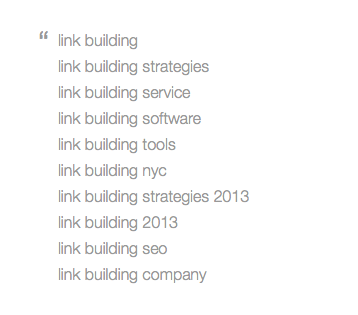

Here are the keywords I used which all have at least 100 results.

The results are pretty interesting for harvester speed.

Times are in minutes:seconds.

1st Place – SquidProxies.com – Scraped all 1,000 URLs in 0:42

2nd Place – PowerupHosting.com – Scraped 985 URLs in 1:02

3rd Place – BuyProxies.org – Scraped all 1,000 URLs in 1:22

4th Place – MyPrivateProxy.net – Scraped only 700 URLs in 2:42
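Raw times are hard to compare when the providers returned different URL counts, so it can help to normalize them to URLs per second. A minimal sketch, assuming the times above are minutes:seconds; the function names are hypothetical and the numbers are the ones reported in this test:

```python
# Harvester results reported above; times assumed to be "m:ss".
RESULTS = [
    ("SquidProxies.com", 1000, "0:42"),
    ("PowerupHosting.com", 985, "1:02"),
    ("BuyProxies.org", 1000, "1:22"),
    ("MyPrivateProxy.net", 700, "2:42"),
]


def mmss_to_seconds(t: str) -> int:
    """Convert an 'm:ss' string to whole seconds."""
    minutes, seconds = t.split(":")
    return int(minutes) * 60 + int(seconds)


def urls_per_second(urls: int, t: str) -> float:
    """Average harvest rate for one proxy set."""
    return urls / mmss_to_seconds(t)


if __name__ == "__main__":
    for name, urls, t in RESULTS:
        print(f"{name:20} {urls_per_second(urls, t):5.1f} URLs/s")
```

That works out to roughly 23.8, 15.9, 12.2 and 4.3 URLs/s, and the SquidProxies figure lines up with the 22 to 25 URLs/s a reader reports further down.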

SquidProxies takes it as the best proxy service for speed and overall efficiency.

Yes, I was quite blown away, as I had been using BuyProxies for the last year or so. After the initial results I even waited a couple of hours, retested, and averaged the times; they were very close. Keep in mind that these providers often hand out different sets of proxies that vary in quality, so you can usually file a ticket and get a replacement set, which would change my results. This is just based on the first set I received.

The final test was to pummel the shit out of these proxies with the site: search operator and see which could withstand the heat the longest.

That operator and inurl: tend to piss Google off the most, so they’re perfect for intentionally getting proxies banned.

The results of the proxy beatdown session:

Poor testing method.

The faster proxies were banned the quickest since they checked more URLs in a shorter time frame. The slower proxies take forever to load each thread, so the banning takes a bit longer. This is the only conclusion I can draw; if you know more about this, please comment below.

Could you have tested the total URLs returned with site: or inurl: rather than the speed? Surely whichever returned the most results across a set of sites would have been banned after the most queries.

Hey Jonny, yeah I just thought of a way to test them for durability.

I’ll harvest a bunch of urls, then run the Google index check. We’ll see how many urls it confirms as indexed before they get banned. I’ll update shortly.

Hi Jacob, I am new to your blog, but when I read some of your articles I got very excited. I am new to the SEO world, but I am interested in learning white hat SEO techniques. Coming to the point you mentioned in the article: by using proxy servers, can we achieve rankings in Google? If so, please explain how to do it.

Waiting for your reply.

On SquidProxies the $24/month plan doesn’t give you the option to have proxies in multiple cities. Do you think that would be an issue or tip off Google while scraping?

You know I’m not 100% sure on that, I will send a message to SquidProxies support.

Hey man, Thanks for this. Another nice piece of work.

Surely, though, there is a trade-off between speed and getting banned. If the fast ones get blocked by Google more frequently, then surely slow and steady will work out the same in the long run, since they all still work?

Yeah, I hear that, I’m going to play with it some more. I think the problem might be trying to get them banned and run a legit test with such a small number of proxies.

The harvester test ran just fine, but I used the Google index check to test their durability, because if you don’t have the threads set properly it will get your proxies banned. I know because I have done it many times, though it’s always been a temporary ban for me.

The problem I’m seeing is that even with 100 proxies, the threads don’t have to be that high to get them banned. If you use 1:10 they will get banned for sure. So if you took, say, 50,000 URLs and set 10 threads on the index checker with 100 proxies, they would start to get banned. I haven’t run the index checker in a while (I use Inspyder for that), but I think it was around 1:20 or even lower to keep index checking without getting proxies banned.

So for testing the sets of 10, I set 2 threads, which made a 1:5 ratio, way too high to run at all. Any ideas on a different method for intentionally banning the proxies?
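The ratios in this thread get written both ways (1:10, 10:1, 1:5), so it helps to pin down one direction. A minimal sketch, assuming "ratio" means how many proxies sit behind each connection (thread); the function names are hypothetical, and the threshold you pass in, such as the rough 20 proxies per thread mentioned above, is a rule of thumb from this discussion rather than a ScrapeBox setting:

```python
def proxies_per_connection(proxies: int, connections: int) -> float:
    """How many proxies each connection (thread) rotates through on average."""
    if connections <= 0:
        raise ValueError("connections must be positive")
    return proxies / connections


def max_connections(proxies: int, min_proxies_per_connection: int) -> int:
    """Highest connection count that keeps at least `min_proxies_per_connection`
    proxies behind each connection; never returns less than 1."""
    if min_proxies_per_connection <= 0:
        raise ValueError("min_proxies_per_connection must be positive")
    return max(1, proxies // min_proxies_per_connection)


if __name__ == "__main__":
    # The durability test: 10 proxies on 2 threads -> 5 proxies per thread.
    print(proxies_per_connection(10, 2))   # 5.0
    # 100 proxies at a rough 20-per-thread target -> 5 threads.
    print(max_connections(100, 20))        # 5
    # 10 proxies cannot meet that target, so only the 1-thread minimum is left.
    print(max_connections(10, 20))         # 1
```

Writing it as proxies per connection keeps the number growing as the setup gets gentler, which avoids the 1:10 versus 10:1 confusion.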

How about the Google competition check? I’ve had trouble with that in the past when not on the right settings.

Hi Jacob,

Sorry, are proxies a white hat or black hat SEO strategy?

Thanks

Um, neither. Proxies aren’t a strategy; they are a tool.

Thanks Jacob, thinking to buy SquidProxies, will check them from my side :)

No prob Rajesh, they should work out great.

…how on earth did you find a pic of what I will look like in 20 years above?!?

The benefits of not being able to move, is that it requires correct sitting posture…

Hi Jacob,

Great inspirational article! Looking forward to doing some testing with my Dutch website. What are good proxy services in the Netherlands? Do you know any?

Thanks!

Huh?

In your article you talk about where to buy the best private proxies. As these private proxies are not from the Netherlands, I was thinking I should use some Dutch ones for my SEO purposes. Am I wrong? And if I do need some Dutch private proxies, do you know where to buy them? I can’t find any… :(

Your help is much appreciated Jacob! Thanks! :-)

Hey Buddy, the geographic location of your proxies shouldn’t matter too much for your SEO submissions.

Since the proxy is just helping you facilitate the registration and submission, using US proxies while in the Netherlands should be just fine.

The one thing to consider: I assume you’ll be scraping Google US, which you should use U.S. proxies for.

To save 10% off the monthly cost, be sure to grab up this discount – https://www.jacobking.com/squidproxies-coupon

Hi Jacob, so if I understand correctly using private proxies from the U.S. for SEO submissions wouldn’t be a problem. However, when I want to scrape Google NL to harvest some URLs, I really need a private proxy from the Netherlands. Is that correct?

If so, I really hope I can find one, cause they seem really difficult to find. If you have any suggestions for that, please let me know.

You know, I haven’t done much scraping of Google other than Google.com.

You’d have to test it, but I’m thinking it would be fine; people use different Google country domains while in the U.S. all the time, so it makes sense.

The only thing I would avoid is some foreign proxies nailing Google.com like crazy, that might get proxies banned quicker than using US proxies.

Give it a shot with 10 US proxies to test.

I’ve used SquidProxies for South African scraping and it worked out perfectly; I’m actually looking to renew my service with them. So I’m sure your Dutch scraping should work out fine.

Thanks Jacob. I will do so after next week, as this week I’m having several job interviews. Once I have some decent result on the proxies, I’ll let you know the outcome.

I got 10 proxies from SquidProxies and they got banned by Google when scraping Google.nl results. I set it to 1 connection and a delay of 2-5 minutes.

Did you manage to succeed in The Netherlands?

Hey Erik, it’s really tough to do much scraping with 10 proxies tbh.

I usually scrape with 100 proxies at 5 threads. You don’t need 100, but the ratio should be similar. 10 proxies only allows for the minimum: 1 thread, a 1:10 ratio of threads to proxies.

Hi Jacob, how many results do you get per 10 minutes of scraping with 100 private proxies from SquidProxies when you want to scrape 24/7?

I want a rough estimate. For example, are 6,000 results normal after 10 minutes, or 60,000?

It normally sits around 175 URLs/second, I believe. Been drinking, hard to think right now.
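Taking the 175 URLs/second figure at face value (it is a from-memory estimate), the per-10-minute number the question asks about is simple arithmetic. A minimal sketch; the function name is hypothetical and the rate is assumed to stay constant:

```python
def urls_in_window(urls_per_second: float, minutes: float) -> int:
    """Expected harvest at a steady rate; ignores delays, bans and duplicates."""
    return round(urls_per_second * minutes * 60)


print(urls_in_window(175, 10))  # 105000
```

At that rate the answer would be on the order of 105,000 per 10 minutes, before duplicates are removed, rather than 6,000 or 60,000.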

Hi Jacob, I get banned every time with my SquidProxies when I try to scrape big lists. I even set a total of 1 connection for all 25 of my proxies. So 1 connection in total for 25 proxies. Still got banned after an hour of scraping.

What are you doing differently, that you can scrape 24/7?

What do you have the RND delay set at? Try increasing the lower number; I think by default it’s set at 1, so increase it to 3. This will create a longer delay between queries.

Yeah, I tried some settings with this too. My last test was one connection with the RND delay set at 10 seconds. Still got banned, unfortunately :(

Shitty, I’d submit a ticket to Squidproxies and they’ll give you a fresh set.

Well, I tried 5 different sets and Google keeps hating me :(. Now I’m scraping with BuyProxies, but they are much, much slower, like you found in the test. I used your test to go with SquidProxies; they are the fastest with ease, but they are no marathon runners.

BuyProxies averages 9 URLs/s while SquidProxies does 22-25, with 1 connection.

I really should have tested the durability more, it was tough with only 10 proxies tho.

Please post back with how this plays out, thanks Hans.

Stories like this make me wonder just what goes into being a proxy provider.

Okay I ended my subscription. I have had a lot of contact via chat and email with them and tried a lot of sets of proxies, but keep getting bans.

The techs also looked into the problem and say they can’t find it. I sent them my list of keywords. They probably just did a scrape for 15 minutes or something; not sure how they tested it.

It made me wonder why you can use 100 proxies with 10 connections and not get banned. Maybe because the usage looks more natural when you have more proxies. Not sure.

Damn, that sucks, Hans. If you have better luck elsewhere, please post back here. I need to correct that ratio; usually I scraped with 5 connections on 100 proxies.

I’m scraping with BuyProxies now (30 proxies); they are much slower, something like 7-11 URLs per second on average.

Also bought UD a week ago and am testing with it now, and bought a VPS today via your affiliate link.

But UD is pretty slow. It took 11 hours to test 12,000 URLs in the site detector. So if you scrape, for example, 32,400 URLs an hour (9 URLs/s), that’s 777,600 a day, and since a lot of results are duplicates, you’re left with maybe 100,000 unique URLs a day? Then when I submit them all to UD’s link checker, I still have to wait days and days before the site detector has checked everything.
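Using the figures in this comment (about 9 URLs/s from BuyProxies, 12,000 URLs through the site detector in 11 hours, and a guessed 100,000 unique URLs per day), a small sketch shows where the bottleneck sits. The function names are hypothetical and all rates are assumed constant:

```python
SECONDS_PER_DAY = 24 * 60 * 60


def scraped_per_day(urls_per_second: float) -> int:
    """URLs harvested in 24 hours at a steady rate (duplicates included)."""
    return round(urls_per_second * SECONDS_PER_DAY)


def detector_per_day(urls_checked: int, hours: float) -> float:
    """Site-detector throughput scaled from one measured run to 24 hours."""
    return urls_checked / hours * 24


def days_to_check(unique_urls: int, per_day: float) -> float:
    """Days the detector needs to work through a list of unique URLs."""
    return unique_urls / per_day


if __name__ == "__main__":
    daily_scrape = scraped_per_day(9)            # 777600
    daily_detect = detector_per_day(12_000, 11)  # about 26,182
    print(daily_scrape, round(daily_detect))
    print(round(days_to_check(100_000, daily_detect), 1))  # 3.8
```

Scraping outpaces the detector by roughly 30 to 1, so even one day’s worth of unique URLs would take close to four days to detect, which backs up the point that raw proxy speed matters less here than detector throughput.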

So I think it’s not that important that the proxies aren’t that fast. Since UD can only check so few sites a day, I’m wondering why you have so many proxies for scraping? Do you have a lot of different VPSes running UD to check all the sites for their type?

And my second question: because it takes a very long time to test all your URLs, do you have a particular schedule for checking URLs in the site detector and using UD for building links? Because you can’t do both at the same time.

Hey Hans, good questions. I have two copies of UD, one on my desktop that I process sites in. You want to cut down on running crap through the site detector, so be sure to develop a filter list containing domains such as blogspot, webs, etc.

Those will be a complete waste. The other tweak is to scrape for only one platform at a time, this will greatly improve the processing efficiency of the site detector.

I’m new to all this, Jacob, but I think I need proxies so I can set up a lot of YouTube accounts and channels. Each channel will target the same keywords in a local niche, but each in a different US city.

Is this something that proxies would be good for?

Also, one person told me that you should use the proxies from one browser, and run CCleaner each time before you use another proxy.

Any thoughts? Thanks, Paul


Yes exactly Paul, you would need proxies to create multiple accounts. It is important though, with YouTube especially, to bind a specific proxy to each account creation for return logins.

Otherwise Google will connect all the same accounts under one owner and end your ass.

The other method is to create the accounts, upload vids, spruce it up, then never login again. This is something I have put quite a bit of thought into (YouTube specifically) and it remains a tough nut to crack.

The phone number is the other issue, I read in a BH thread somewhere to use a free text message smart phone app, they have huge lists of dummy phone numbers you can use and receive the confirmation text to verify the account. I sense a lot of black hat forums in your future ;-)

Hi Jacob, Hans here with an update. I have some new insights and questions.

The speed of the UD site detector is starting to annoy me. So I bought proxies from SquidProxies again, 10 this time, because I found that BuyProxies are pretty slow when I use them in Internet Explorer, for example. They are only good for scraping keywords. So I figured BuyProxies must also be slower in UD than the lightning fast SquidProxies. I also read complaints everywhere about UD’s speed, so I thought 10 SquidProxies would be enough for UD. It’s more a matter of your computer’s speed, I think.

Speed change so far after switching from 30 BuyProxies to 10 private SquidProxies in the UD site detector: UD now needs something like 30 hours to detect 45,000 sites (with everything selected in the site detector), compared with about 40 hours before, I think.
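Turning those two run times into throughput makes the change easier to compare. A worked sketch, assuming both runs covered the same 45,000 sites and taking the 40-hour figure as the commenter’s recollection:

$$
\frac{45\,000\ \text{sites}}{40\ \text{h}} = 1125\ \text{sites/h}, \qquad
\frac{45\,000\ \text{sites}}{30\ \text{h}} = 1500\ \text{sites/h}, \qquad
\frac{1500}{1125} \approx 1.33
$$

So the switch would amount to roughly a third more sites detected per hour.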

But I want to make it faster, so I looked up my computer’s specs. Here they are:

- System: ASUS/ASmobile M2 Motherboard M2N-MX SE Plus

- Motherboard Brand: ASUSTeK Computer INC.

- Motherboard Model: M2N-MX SE Plus Rev x.xx

- Processor: AMD Athlon(tm) 64 X2 Dual Core Processor 5200+ 64Bit

- Processor Speed: 2700 MHz

- Available Memory: 3072 MB 301.4 MHz CL: 5.0 clocks

- Maximum Memory: 4GB

- Total number of locks: 2 Socket

It’s DDR2 memory. Do you think upgrading my computer to 2x2GB DDR2 800MHz will make a huge difference in site detector speed? Or is it just a waste of money? What do you think the speed difference will be? And do you have any other advice about speed?

Hrmm, hey Hans, maybe you should try playing with GSA SER a bit.

The site detector is kind of a POS in UD tbh.

I run on a 5GB RAM VPS, and it runs smooth as butter. But 20k URLs will take several hours. This is why I’ve run two copies simultaneously before, one locally scanning sites and one on the VPS running the submissions.

I think with your current setup it should be fine, the additional ram shouldn’t be that significant. Try running GSA SER site detector, that thing hauls ass. Then go to the site list folders and paste those into UD.

I’ve had a SER on a VPS running for about 5 months now. Some suggest that SER be used as just a poster, that scraping of targets is best done by Scrapebox. I take it you disagree.

Do you know of a video detailing how SER can be used just as a scraper?

No, I agree. The SER scraper is decent; you’ll find it under options and tools. Overall though, having GSA scrape its own targets is quite inefficient vs. just having a site list ready for it to churn through.

Scrapebox works for this, running alongside GSA.

Hi Jacob, thanks for your advice. You say: “Try running GSA SER site detector, that thing hauls ass. Then go to the site list folders and paste those into UD.”

1. I don’t have GSA SER yet, but if it’s that great for site detection, why aren’t you using it already? Or is this how you detect sites now, like you advised?

“I run on a 5gb ram vps, runs smooth as butter. But 20k urls will take several hours. This is why I’ve run two copies simultaneously *before*,”

2. So you don’t use UD for site detection anymore? And you use the GSA SER site detector first?

First you say that you use UD for site detection, and then you say that you use GSA for site detection and then put those in UD. I just want to be sure about things.

3. I will buy GSA SER sometime in the upcoming days via your affiliate links, because I need it for some link building. But I just want to be sure about the site detection thing.

I use both GSA and UD site detector, not an exact science, but GSA handles most of the platforms UD does so you can detect sites in GSA and only process the successful sites in UD.

What should I do if the proxy test result is ERROR TIMEOUT and ERROR 404?

Please tell me the best solution and what to do…

Wait a few hours, turn down your connections or threads, you need to find the sweet spot between the number of proxies and number of connections. At least 1:10. If that doesn’t work contact support and yell at them a bit.

Hi Jacob,

I bought GSA via your aff. link and tested it. The results are very, very disappointing. Maybe I did something wrong, so I took a screenshot: http://i.imgur.com/iFAyxlY.png

I imported all my scraped URLs (100,000+) into GSA, and from these GSA made a file called ‘sitelist_indexer-whois or statistics’ with ~1,400 URLs.

I imported those files into UD and got almost no successes. So this is clearly not gonna work.

So did I do something wrong, or is it just not working? Looking forward to your response.

Argh, GSA definitely has a steep learning curve, but the site detector should be working. So you did a detection run, ticked the save to file then ran those in a task?

Hi Jacob,

Yes, I think I did everything like you told me. Maybe you could watch over TeamViewer for a couple of seconds, so I’m 100% sure I’m doing everything right?

Hi Jacob! Thanks for the article. I will be trying your preferred vendor. Today I am trying InstantProxies; have you tried them before? They had a really good price offering. Either way, I will test it and let you and your community know.

Justin

Do their proxies work for registering for classifieds?

Is there a specific one that does that?

Yup they should work just fine.

Hi Jacob,

Another great post. Can I just point out a small error? You referred to the 2nd and 3rd place finishes in seconds; should it not be minutes?

Anyway, keep the posts coming.

I took your advice and purchased SquidProxies. I have been trying to get them to work since I purchased them. They fail constantly; support keeps sending me new proxies, but I have literally not been able to run any tools for the past 5 days because I can’t get the proxies to stay alive for more than a few minutes. I am assuming that I am doing something wrong, since there is no way Squid can be this bad if you are endorsing them. Is there anything I shouldn’t do when using these private proxies?

How many proxies are you using and active threads? Also is there a delay set?

I just purchased 10 PRIVATE ones, but they seem to be a joke. What a waste of $20. Please edit your tutorial, Jacob, before more people get screwed.

Hey Backy, how many connections do you have ScrapeBox set to? Under settings, maximum connections.

Hello Jacob,

I’m quite a newbie and I just want to ask for your recommendation about proxies. I currently have 50 semi-dedicated proxies from buyproxies. I’m using all my proxies to blast links on GSA SER.

What do you think is the best proxy provider for spamming GSA SER? Buyproxies or Squidproxies?

I heard that squidproxies is faster, but because it’s faster, the proxies are easily banned by Google. I need your help please. Thanks in advance!

Hello Jacob,

Thanks for running the test. I signed up with SquidProxies a week ago and never got it to work. Out of the 25 PRIVATE proxies, between 5 and 10 either timed out or were banned by Google (302 error) before the first scrape session. Tech support was fast and provided new proxies; however, I wanted to pay for proxies to save time compared to public ones… in the end I spent more time emailing support than I used to spend harvesting public proxies.

I will try out BuyProxies now… it can’t be worse than my experience with SquidProxies!

Well, please post back with your experience. Maybe I need to revisit this, quality control from Squidproxies seems to be slipping.

Hey Jacob, I know this is a bit of an old post, but I was curious: did you get shared or private proxies from the providers you mentioned? I had some shared proxies from buyproxies.com and, without even doing any really crazy web surfing, the speed dropped to a crawl before my first month was up. I think I’m gonna switch to SquidProxies, especially with the info you posted; they seem to actually have their crap together.

I’ve always done private personally.

Hi,Jacob

Why does proxy speed matter, and how is bandwidth counted?

Speed, that is: it determines how long your scraping is going to take and the success rate of software submissions.

Hi Jacob,

I took your advice, bought the proxies from Squidproxies, and … cursed the day I did so. None of them is working. I tested them on Scrapebox. They return 403 errors. It’s been two days and they have not responded to my support ticket.

Yeah, apparently they have been out to lunch in the quality control department. I will be updating this post soon. Did you bitch them out for a refund or get a working set?

Hey Jacob,

Re-check your proxy pricing. These guys charge a whole lot more. You might want to update your pricing or ask “why” almost every proxy business just increased their prices across the board.

Thanks for the heads up, this whole post needs an update.

PS guys,

I have been using EZProxies. 20 private proxies for 20 bucks. Their customer service is slow as shit and you need to do a monthly test on your proxies because at least 2 of them will be dead each month. That being said, you need to grease the wheel but other than that, the speed was good.

Ya Jacob, I’m getting shit on with SquidProxies too. It’s a mess. Purchased 25 private proxies, and 2 of them actually work. You should def. change up your recommendation.

I’ve requested new sets of proxies 2 times now with support. To their credit they’ve been highly responsive, but each time they just give me another list of 25 shitty proxies that don’t work. This is like paying for private proxies and getting public ones.

They recently got hacked, I don’t know if that is screwing anything else up for them.

Yup fuck them, looks like Buyproxies is taking the crown by default of Squidproxies just sucking major dick as a company. Terrible consistency and they just don’t give a fuck either.

True story. Cancelled my order with SquidProxies today.

How can I know how many proxies I will need? Sorry I’m kinda new

For light scraping 25 will do.

For light GSAing, 50 will do.

Hi,

How do these proxies compare to Proxyhub?

I’m stuck between BuyProxies, your new recommendation, and ProxyHub.

Hey Jacob,

I am a complete newb when it comes to Scrapebox. I just started and did around 10 queries for different terms, trying to pick up some sites with PR. Anyway, all 22 of my proxies got banned after just that. It’s really frustrating, because I thought having 22 private ones would last a long time.

Anyway, a video recommended this setup:

- 1000 results

- standard proxy options (I have 22)

- scraping Google and Yahoo

It went wrong after checking PR for the 10th time.

How can I make sure I don’t get banned, and how many proxies will I need to be serious about scraping, say nonstop for 6 hours? I imagine I will be doing it a lot!

Adjust your connection settings, they might be really high for that # of proxies.

You might need to go down to like 2 connections or something small; it should be about 1 connection per 10 proxies. Sounds like your power is just turned up way too high and burned out the proxies instantly.

I tried out Poweruphosting today. Horrible experience. At least 1/3 of the proxies were dead altogether (no response whatsoever), another 3 or 4 seemed to be banned already. I went into the live chat and got zero help, simply a response to open a ticket. So, I did, and got what amounted to an “oh, well *shrug*” response.

I guess on to the next one…

Well that sounds shitty, I’ll hit them up for you.

Don’t bother. I already canceled and found a different service. I just figured you’d want to know.

Alright, well I will definitely ask them what’s up; they are supposed to be providing consistent quality.

@Jacob

Firstly, web scraping is just one use of proxies. When reviewing, you should also include features such as 24/7 customer support, how useful the control panel is, how easy it is to refresh IPs, the IP quality (virgin or used), the number of subnets, and the speed.

Speed, of course, is an important element, but the rest of what I mentioned is important as well. Some companies sell a single net range for the same price, while others sell mixed ones.

You should also check limeproxies.com, which is aimed at enterprise-quality proxies, offering an SLA and custom speeds of up to 10Gbps.

Very true, proxies do have several other functions.

For me it’s running web scrapes or submitting/creating accounts. I think I reached out to you guys in the past about testing your proxies and forming a partnership, not sure what happened. I don’t know if that cost can be justified though, also are you cool with software like GSA search engine ranker? I think that might be the reason I never tried you guys.

Hi Jacob

I’m kind of new on this SEO stuff but learning a lot from you and others as well, thank you!

I have some questions if you dont mind:

Does using a VPN like “hide-my-ass” work the same as changing proxies?

Have you heard about Cloud PBN? If you have… what do you think about it?

And last: I’m just starting and I think I have a pretty good picture of creating a PBN, but I’m terribly scared about leaving footprints… of all the software you recommend, which one do you think I should buy for this purpose?

Thanks so much

Yeah, you might want to pump the brakes then. CloudPBN is some management tool made by some complete douche bag who is associated with Alex Becker, who is quite possibly the king of all the douche bags.

Hi Kind Sir Jacob,

I was wondering if you could guide a noob on how to set threads and HTML timeout in GSA SER and Scrapebox. I will be buying 20 semi-dedicated proxies, most likely from BuyProxies, because I tried cheap private proxies and their service was doo doo. Responses took around 24 hours, just to tell me the proxies were working on their side, even though GSA SER and Scrapebox were telling me only 8 of 20 proxies were working and the rest had a 404 or not-working status. I asked for a refund and they kept around 5 USD for themselves, so they made that off me for 2 days.

But enough about those crooks, lol. If I buy 20 proxies from BuyProxies, what should I set the threads and HTML timeout to? Before, I had them at 110 threads and 105 HTML timeout (keep in mind I’m a noob here). I haven’t used Scrapebox for a project yet; I’ve only used its proxy checker tool with my previous proxies. I have around 4 sites that I want to build links to now, and I’ve set up 3 of them with 3 tiers each. What would you recommend, kind sir? Thanks in advance! :)

Depending on what you’re doing, try a 2:1 ratio of proxies to connections with SB, so 20 proxies run on 10 connections.

For GSA on 20 proxies, something like 1:3 proxies to threads, so you could run around 60 threads.

Thanks Jacob!

I have 3 projects running on GSA now, all set up as 3-tier projects. I just bought Scrapebox as well, so I’m trying to learn that a bit and get one or two more 3-tier projects going. I want to use the 20 proxies for link building and probably for scraping proxies too, but I want to be on the safe side. So if I’m running, for example, 5 projects on both GSA and Scrapebox, would I set both to about 50-60 threads and around 30 HTML timeout, or what would you recommend? Again, I’m still a noob, so sorry if I’m leaving anything out.

Those settings sound good but I wouldn’t run both at the same time, not enough proxies. And if I had more I’d either split them or wait until GSA was finished running the submissions. For SB try it on really low connections like 2 or 3 and move it up if the proxies can handle it. I think I run it at 5 connections with 50 proxies.

thanks again Jacob!

Im about to buy some proxies now and start playing around :)

Hey Jacob, I have a quick question, as I’m new to the world of proxies.

Lets say that I want to enter in email addresses on my own squeeze page using aweber and want to avoid proxy detection from them, what type of proxies would be best for my purpose? Free, shared, semi-dedicated, or elite/private proxies?

Any kind, just make sure you have it enabled properly in your browser. Then visit Google and type “what is my IP” see your home or the proxy? Good to go.

Do you have any reviews for newipnow.com ?

Nah haven’t used them.

Good to know that!

Hey Jacob, do you know which of the services above has the most unique states and unique cities, with the widest variety of NON-sequential IPs? I’m looking for proxies everywhere in the USA and in top tier-1 countries such as the UK, CA, NZ and AU. If they don’t have those top 5 countries, I’m okay with just the USA as long as there is a good variety of non-sequential IPs.

From newipnow.com I got nothing but sequential IPs that died a couple of days later, even though I never had a chance to use them.

It doesn’t matter if they are semi-dedicated or private; I just need a good variety of non-sequential IPs. I’ve noticed that a lot of providers hand out sequential IPs when the proxies come from the same state and city. A bunch of sequential IPs visiting a site looks too much like a bot, and that’s not what I want, so if any of the services above can get me what I need, let me know which one.

Thanks :)
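Checking a new proxy list for sequential IPs before using it only takes a few lines. A minimal sketch with Python’s `ipaddress` module, assuming IPv4 addresses; treating “shares a /24 subnet” or “numerically adjacent” as sequential is an assumption, not a rule any provider or site publishes.

```python
import ipaddress
from collections import defaultdict


def group_by_subnet(ips, prefix=24):
    """Map each /prefix network to the addresses in the list that fall inside it."""
    groups = defaultdict(list)
    for ip in ips:
        addr = ipaddress.ip_address(ip)
        # strict=False lets a host address define its enclosing network.
        net = ipaddress.ip_network(f"{addr}/{prefix}", strict=False)
        groups[net].append(addr)
    return dict(groups)


def sequential_pairs(ips):
    """Return pairs of addresses in the list that are numerically adjacent (IPv4 assumed)."""
    addrs = sorted(ipaddress.ip_address(ip) for ip in ips)
    return [(a, b) for a, b in zip(addrs, addrs[1:]) if int(b) - int(a) == 1]


if __name__ == "__main__":
    proxies = ["198.51.100.10", "198.51.100.11", "198.51.100.12", "203.0.113.7"]
    for net, members in group_by_subnet(proxies).items():
        print(net, len(members))
    print(sequential_pairs(proxies))
```

A list where most addresses land in one or two subnets, or where `sequential_pairs` returns many pairs, is the pattern the comment above describes.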

Hi Jacob. I was fortunate enough to be granted access to 1000 private squid proxies, and never had any issues with them. On the rare occasions I got an error after checking in ScrapeBox (less than 5% dropout) a retest minutes later would show 100% working fine. That said, to be on the safe side I always kept my number of threads way down, and used the random delay as well. No sense burning them out.

For use outside of automation tools, when routing my web browser through a proxy I found certain sites (typically those where I wanted to create an account) problematic. On those occasions I found a SOCKS5 proxy seemed to give me a better experience.

There’s no shortage of software available to get your PC / browser hooked up, such as Proxifier which can assign a given proxy to specific situations. Many browsers do support proxies anyway, this just makes them easier to configure. Of course if Firefox is your weapon of choice there are plenty of proxy management add-ons. Also take a look at FoxyProxy as they provide a pretty cool little management tool for Firefox, Chrome and IE. HTH.
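Keeping threads low and adding a random delay, as Chris describes, can be sketched as a small worker pool that pauses for a random interval before each request. A minimal sketch in Python, assuming a hypothetical `do_request` callable that stands in for whatever your script does per request; the built-in delay settings in tools like ScrapeBox are separate from this.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def jittered_delay(min_s, max_s, rng=random):
    """Pick a random pause length in seconds between min_s and max_s."""
    return rng.uniform(min_s, max_s)


def run_throttled(tasks, do_request, threads=3, min_s=1.0, max_s=5.0, sleep=time.sleep):
    """Run do_request(task) for each task on a small pool, pausing randomly before each call.

    do_request is a hypothetical placeholder; sleep is injectable so it can be stubbed out.
    """
    def worker(task):
        sleep(jittered_delay(min_s, max_s))
        return do_request(task)

    # A low max_workers keeps the number of simultaneous connections down.
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # map() returns results in the same order as tasks.
        return list(pool.map(worker, tasks))


if __name__ == "__main__":
    results = run_throttled(["a", "b", "c"], str.upper, threads=2, min_s=0.1, max_s=0.5)
    print(results)
```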

Sweet info, thanks for dropping in Chris.

Hi Jacob,

I’m brand new to proxies but took the plunge and used your link to get 10 semi-dedicated proxies @Powerhosting in return for a super article and service here!

So, being new, how do I actually set them up? (I haven’t received anything yet except the invoice.)

Is it a set of IP’s to enter in my browser or direct into my SEO tool? (Why not both) :)

Or do I use one of them for ACCESS and 9 for rotation?

Have a super day

Peter

I had good results with ipfreelyproxies.net from Scrapebox.

Hi, Jacob. Thanks for the good article. I want to buy a private USA proxy on the website http://buy.fineproxy.org/eng/individualproxy.html. They offer a private proxy for 30 days for $5 (1 IP). Do you think that’s a good deal?

That sounds awful; that would be $50 for 10 proxies lol. Try Squidproxies.

If I buy from Squidproxies, does that mean I will only be able to scrape Google US data?

I want to scrape data from India.

Thanks

No, just set ScrapeBox to the Google you want to hit.

You can select which Google to scrape; add google.co.in.

Hi Jacob,

I am quite impressed to find so many of the questions and answers I was looking for in one place. Thanks for helping us by sharing your knowledge!

My scenario is a little bit different.

I buy/book tickets, stocks, and tokens online from different sites. Some of those web servers are really busy when they open their gateways, and I often get banned from those sites because of persistent web form submissions. (They might think I’m running a DDoS program.)

My plan is to run 20 threads with 100 form submissions in each thread (2,000 submissions within 10 minutes).

Can you suggest how many submissions per minute are safe to avoid this issue, and how many proxies I should use?

I know it’s difficult, but I want to make it happen!

Regards,

Marke

Hrmm that sounds like quite a bit of activity. I run GSA like 50 threads with 50 proxies.

Not exactly sure about the software you’re running, but I’d say at least 50-100 proxies. Try private ones if you’re having issues with throttling on shared.

Thanks man, I was trying to do some SEO for my Showbox app download website, and when it came to buying proxies I just got confused. There are so many sites, and they all claim to be the best. Let me try Squidproxies.com; hopefully it is what I need.

Hi Jacob, we would love you to try our private proxy service!

Prices go as low as $0.40 per private proxy.

Contact us to get your free trial

No